Understanding Student Perception and Performance with Khanmigo: A Mixed-Methods Approach AP Research

Introduction

Artificial Intelligence (AI) is transforming classrooms around the world by offering students a new approach to learning. In response, the educational platform Khan Academy released its own AI model, Khanmigo, as the market's only student-focused AI model. Currently, Khanmigo is being piloted in over 100 school districts, one of which is Palm Beach County (PBC), to support students in all their academic challenges. With AI being such a new tool, the conversation surrounding it focuses on its potential (Cooper et al., 2024). Because it is so new, there is limited research on how students, especially high school students, perceive and benefit from it. Available research focuses on college students and teacher perceptions, leaving a gap in understanding how AI impacts high school students. The lack of current research on Khanmigo's effect on high school students is alarming, considering Khanmigo's increasing use in school districts. This study used eight participants: four were assigned to the AI group, and the other four were assigned to the Non-AI group. The first part of the study consisted of an eight-question test, so that quantitative data from student scores could be collected and interpreted. Then, a one-on-one interview consisting of open-ended questions was conducted to collect qualitative data about the participants' views on their experience during the test. Next, a deductive thematic analysis was used to group similar and contrasting interview responses under predetermined themes. The results showed that Khanmigo did not give students any advantage over non-AI users. This research produced a new understanding of the themes Strategies Used, Confidence Levels, Understanding of the Material, and Time Management. Future researchers could build on these themes by using a larger sample of students from multiple schools, allowing results to reflect diverse academic environments.

Literature Review

Artificial Intelligence (AI)

"Can Machines Think?" Alan Turing, a leading computer engineer in the 20th century, created the concept of AI out of the question of whether machines would ever have the capacity to think. Turing proposed that a machine that can talk would be called AI. From there, he further asserted that AI would refer to a simulation of human traits by computers or other machinery; the goal of AI was to create a machine fully able to be human in how it acts and thinks (Xu et al., 2021, p. 2). Since Turing's early findings, AI has evolved into complex machinery and has been implemented in multiple fields, such as education, healthcare, finance, transportation, and agriculture (California Miramar University, n.d.). Today, AI is widely used in schools to tutor students, promoting a deeper understanding of content that might not have been understood the first time it was seen. AI systems such as Khanmigo, ChatGPT, and Photomath give instant and constructive feedback tailored to students' needs. However, these advancements in AI have been met with little research to determine AI's efficacy in providing engaging content that allows for deeper understanding in students.

AI in Education

AI in education has played an increasingly significant role in student learning in recent years. A research study conducted by Chun-Hung Lin et al. (2021) analyzed the impact on AI literacy after students of various majors received help from AI on STEM-related tasks. The study took students of various majors, STEM and non-STEM, and measured their AI literacy before and after the class. The results showed that once the class was completed, all students had increased their AI literacy skills, indicating that they may apply AI to benefit their future endeavors in their respective fields. AI serves two leading roles in education: Intelligent Tutoring and Machine Learning. AI has most commonly been used as a means of tutoring. Intelligent Tutoring Systems (ITSs), first introduced in the 1980s, are the earliest form of modern-day chat box help (Murphy, 2019). ITSs are designed to provide students with personalized assistance in learning and mastering content. The other role of AI in education is Machine Learning, in which AI builds a prediction model based on the data learned from the student. It uses this data to develop predictions about students based on their actions and performance. For example, a high school student is sitting at home doing homework for their AP Calculus AB class and does not understand a topic. Instead of struggling, they can turn to AI for instant and comprehensive help. Powered by machine learning, AI does more than answer questions; it analyzes past interactions, identifies patterns in the student's mistakes, and predicts which concepts they will likely struggle with next. Over time, AI can adapt its explanations accordingly, offering personalized teaching to students.

AI and Problem-Solving

With continued integration into education, AI's impact on students' problem-solving abilities raises concerns about student safety. AI was initially designed to enhance learning through personalized content. However, recent research suggests that it can cause dependency. Wang & Li (2024) found in their study that university students are more reliant on AI than ever. However, they expressed that while students in STEM majors are more likely to use AI to help solve complex problems, students in other majors are just as likely to use it solely as an information provider. This raises an ethical dilemma: by intentionally passing their work to AI, students may unintentionally weaken their ability to think critically and solve problems independently in the future. Cognitive Load Theory (CLT) explains that there are limitations to memory that affect learning and problem-solving. CLT suggests that for instruction to be effective for students, it should minimize unnecessary cognitive demands to optimize the amount of information students learn. However, it also emphasizes that instruction is only effective if the content is engaging; if it is boring, students are likely to disengage (Sepp et al., 2019). When considered as an Intelligent Tutor, AI has the potential to create an actively engaging environment that aligns with CLT. An issue arises, though, regarding AI's effect on students' use of physical paper as a way to express their thoughts and work on problems. When used as an intelligent tutor, AI is the instructor who guides students through problems rather than requiring them to work independently. This can make students feel less obligated to express their thoughts on paper and lead them instead to rely on the AI's work as their own (Wang & Li, 2024). This further places students at odds with CLT, because the instruction is not engaging enough to promote a strong and memorable connection with the material.
Furthermore, abandoning paper carries risks, because writing by hand benefits students. It is described as one of the most effective ways to study and retain information, as it avoids distraction while maintaining student focus (The Benefits of Paper vs. Digital Learning, 2019).

Psychological and Cognitive Effects of AI

AI's impact has extended beyond knowledge acquisition within the field of education. Metacognition is defined as the ability of someone to monitor, regulate, and control their own cognitive processes, otherwise known as "thinking about [their] own thinking" (Columbia University, 2019). Metacognition allows us to recognize gaps in our knowledge and work to find ways to learn new information (Sidra & Mason, 2024). However, AI's implementation in education weakens metacognition when not adequately regulated. This issue stems from AI's inability to self-monitor, leaving its users to use their critical thinking skills to evaluate the content and ensure its accuracy. Due to its design, AI is trusted to provide quick and reliable answers, reducing the need for students to monitor the output actively. In return, this may cause students to adopt a passive learning style, increasing their likelihood of developing a dependence on AI. Initiating AI literacy training can mitigate these risks. For example, when students are taught to approach AI actively, checking content for accuracy and making informed decisions, they are more likely to avoid weakening their metacognitive skills (Sidra & Mason, 2024). That way, rather than fostering dependence, AI can be a tool that enhances student learning.

The Gap

After analyzing research regarding AI's implementation in education, apparent gaps were identified, the first of which is the limited research conducted on high school students. Additionally, the studies commonly lacked statistical data, meaning their conclusions were based on assumptions or trends. These gaps motivated me to research Khanmigo, a platform frequently used in high schools. Despite its increasing adoption in schools across the United States, no research has validated the usefulness of Khanmigo specifically for student learning. Instead, the available research on Khanmigo evaluates the program by how much it helped the researcher, Shamini Shetye, master French (2024). Because that study focuses on language learning and reflects the experience of a highly educated adult, it fails to translate to current students with varying learning needs. Studying within the PBC School District allowed me to study the effects of AI in education, as the district has mandated Khanmigo's use in all K-12 classrooms. The importance of researching Khanmigo is demonstrated by the need to assess its effectiveness in enhancing student learning. Therefore, this study aimed to compare the test scores of students who used Khanmigo, along with their perceptions of its usefulness, accuracy, and impact on their learning, with those of a control group that did not use Khanmigo. The deductive thematic analysis of students' perceptions relies on interview questions designed to draw out their opinions about the test and Khanmigo's use. The information gathered in this study will show how students perceive Khanmigo's tools and whether those tools help them complete and understand their work effectively and in a timely manner. Receiving input from PBC students will provide insight into whether or not the students are benefiting from the district's investment in Khanmigo.

Method

Participants and Sampling

For the study, I interviewed eight high school students, four of whom were interviewed after using Khanmigo and the other four without using Khanmigo. When selecting participants for the sample, gender was not considered, as this study does not aim to explore gender-based differences in problem-solving or perceptions of Khanmigo. However, to be included in the sample, a participant had to have been, or currently be, enrolled in an AP Math and an AP Science class so that the questions given would be equally challenging to everyone, maintaining fairness across participants of both groups.

High school students were selected as the sample because the limited existing research focuses on the perceptions of college students and teachers, which leaves a gap in understanding the perceptions of high school students, particularly PBC students. This age group also tends to demonstrate high digital literacy skills, making them well-suited for using AI tools like Khanmigo (Holm, 2024).

Based on my experience with Khanmigo, I hypothesized that students who used Khanmigo would show higher accuracy on the test, but report lower engagement with and comprehension of the material. This hypothesis reflects my expectation that Khanmigo would encourage reliance and reduce active learning. For the group answering the questions without Khanmigo, I hypothesized that they would report higher engagement with the material, as they relied solely on their own problem-solving and critical thinking skills.

Materials and Stimuli

The stimuli questions provided to participants, along with the follow-up interview questions for each group, are included in Appendix A-J.

Before finalizing the stimuli questions, I tested Khanmigo to understand how it responds to various problem types. I tested Khanmigo by writing multiple questions per problem type, so that I could not anticipate specific answers and could evaluate Khanmigo authentically. This was most beneficial because it allowed me to mimic the testing conditions of a participant, which helped me decide on the themes I wanted to examine in the thematic analysis. These interactions with Khanmigo helped finalize a fair and balanced question set that would both challenge participants equally and generate meaningful responses that contributed to the new understanding. The final stimuli set given to participants consisted of eight questions: four math questions (Algebra, Trigonometry, Pre-Calculus, and Probability) and four science questions (Biology, Chemistry, Physics, and Earth Science).

The additional materials given to each participant were consistent, with the exception that the AI group was given access to a computer with only Khanmigo available, while the non-AI group completed the test independently, with no external aid. All participants were given a pencil and lined paper to write down their work if needed. As research from the Paris Corporation notes, writing on paper is one of the most effective ways to stimulate and retain information (“The Benefits of Paper vs. Digital Learning,” 2019). While participants were encouraged to use the paper during the test, written work was neither evaluated nor considered when determining results, which kept the focus of the study on participants' test performance and perceptions. This decision allowed for a realistic representation of student engagement and problem-solving when using Khanmigo.

Procedure

The experiment began by randomly assigning each participant to a group. Participants blindly selected one of two identical tests; to avoid any bias, they were not able to see whether the test was labeled 'AI' or 'Non-AI.' Once four participants were assigned to one group, the remaining participants were automatically assigned to the other group to maintain equal group sizes. Then, each participant was instructed about the ethical parameters in place and given their supplies. The total test time for the eight questions was twenty minutes, giving two and a half minutes per question. Following the test, students moved on to a one-on-one interview to document their experiences, thought processes, and reflections. Interviews were recorded and transcribed for analysis (See Appendix C-J).
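The assignment rule described above can be sketched in a few lines of Python. This is only an illustration, not the procedure's actual implementation (the study used blind selection of paper tests); the function name `assign_groups`, the participant IDs 1 through 8, and the `seed` parameter are assumptions introduced here.

```python
import random


def assign_groups(participants, group_size=4, seed=None):
    """Randomly assign participants to 'AI' or 'Non-AI', capping each group.

    Hypothetical helper: each participant lands in a randomly chosen group
    that still has room, so once one group is full the rest go to the other.
    Assumes len(participants) <= 2 * group_size.
    """
    rng = random.Random(seed)
    groups = {"AI": [], "Non-AI": []}
    for participant in participants:
        # Only groups that are not yet full remain available to draw from.
        open_groups = [name for name, members in groups.items()
                       if len(members) < group_size]
        groups[rng.choice(open_groups)].append(participant)
    return groups


# Participant IDs 1-8 are placeholders, not the study's actual assignment.
assignment = assign_groups(range(1, 9))
print(assignment)
```

Capping each group at four mirrors the rule that the remaining participants were automatically placed once one group was full, which guarantees equal group sizes.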

All participants were asked the same six open-ended questions during the interview, regardless of testing conditions. Each of the six questions corresponded to a specific theme. To understand how participants approached the problems, the first question focused on identifying their problem-solving strategies. The second question studied their confidence and self-efficacy in order to explore how participants felt about their ability to answer the questions. Next, to identify gaps in knowledge or education, the third question identified areas of struggle in the test. The fourth question examined whether taking the test, with or without AI, promoted a deeper understanding or merely allowed the task to be completed with little effort. Then, the fifth question explored time management to find out whether AI improved or hindered the participant's ability to answer questions on time. Finally, the sixth question collected participants' overall attitudes toward working with AI compared to working without it, reflecting their experiences and preferences (See Appendix A).

Afterward, each group received an additional condition-specific question. The non-AI group was asked about their motivations and external pressures to determine whether knowing they were being measured against the AI group influenced their performance. The AI group was asked to reattempt a similar problem without Khanmigo to evaluate their independence and knowledge retention. These participants were given two and a half minutes to answer this additional question, the same time allotted per question during the test.

Ethical Consideration

Because my project required me to interview students, I received permission from my AP Research teacher. Before the tests and interviews started, all participants were informed of the study's purpose and verbally consented to participate. Additionally, they were told they could skip any question or stop at any time without consequence if they felt uncomfortable. To ensure confidentiality, no identifying information about the participants is revealed, and they/them pronouns are used throughout. This study followed ethical standards for research involving human subjects and posed minimal risk to participants.

Data Analysis

The quantitative data consisted of test scores from each participant. The scores within each group were averaged and then compared to determine whether one group performed better and by how much. A t-test and a Mann-Whitney U test were conducted to check whether the difference was statistically significant. Using both tests was appropriate because the normality of the data was uncertain. The t-test evaluates whether the means of the AI and Non-AI groups are significantly different under the assumption of a normal distribution, while the Mann-Whitney U test compares the ranks of the data, making it useful if the t-test's normality assumption is not met.
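To make the two tests concrete, the following is a minimal sketch using only the Python standard library. It is not the calculation actually used in this study: the scores are hypothetical placeholders, the equal-variance (Student's) form of the t-test is an assumption because the variant used is not stated, and the Mann-Whitney p-value is computed exactly by enumerating every possible split of the pooled scores rather than by a normal approximation. The function names are introduced here for illustration.

```python
import math
from itertools import combinations
from statistics import mean, variance

# HYPOTHETICAL placeholder scores (percent correct), NOT the study's data.
ai_scores = [50.0, 62.5, 75.0, 62.5]
non_ai_scores = [75.0, 87.5, 62.5, 75.0]


def t_two_sided_p(t_abs, df, steps=2000):
    """Two-sided p-value: integrate the t density from 0 to |t| (Simpson's rule)."""
    coef = math.gamma((df + 1) / 2) / (math.sqrt(df * math.pi) * math.gamma(df / 2))

    def pdf(x):
        return coef * (1 + x * x / df) ** (-(df + 1) / 2)

    h = t_abs / steps  # steps must be even for Simpson's rule
    total = pdf(0.0) + pdf(t_abs)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * pdf(i * h)
    return max(0.0, 1.0 - 2.0 * total * h / 3.0)


def student_t_test(a, b):
    """Equal-variance two-sample t-test (an assumed variant); returns (t, df, p)."""
    n_a, n_b = len(a), len(b)
    df = n_a + n_b - 2
    pooled_var = ((n_a - 1) * variance(a) + (n_b - 1) * variance(b)) / df
    t = (mean(a) - mean(b)) / math.sqrt(pooled_var * (1 / n_a + 1 / n_b))
    return t, df, t_two_sided_p(abs(t), df)


def u_statistic(x, y):
    """Count the pairs where x beats y; a tie counts as one half."""
    return sum((xi > yi) + 0.5 * (xi == yi) for xi in x for yi in y)


def mann_whitney_u_exact(a, b):
    """Exact two-sided Mann-Whitney U test by enumerating all group splits."""
    pooled = list(a) + list(b)
    n_a = len(a)
    center = n_a * len(b) / 2  # expected U when the groups do not differ
    observed = abs(u_statistic(a, b) - center)
    splits = list(combinations(range(len(pooled)), n_a))
    extreme = 0
    for idx in splits:
        chosen = set(idx)
        x = [pooled[i] for i in chosen]
        y = [pooled[i] for i in range(len(pooled)) if i not in chosen]
        # Count splits at least as far from the center as the observed one.
        if abs(u_statistic(x, y) - center) >= observed - 1e-12:
            extreme += 1
    return u_statistic(a, b), extreme / len(splits)


t, df, p_t = student_t_test(ai_scores, non_ai_scores)
u, p_u = mann_whitney_u_exact(ai_scores, non_ai_scores)
print(f"t = {t:.3f}, df = {df}, p = {p_t:.3f}")
print(f"U = {u}, exact p = {p_u:.3f}")
```

With four participants per group there are only 70 possible splits, so exact enumeration is cheap. A statistics package may handle ties or small samples differently and return slightly different p-values.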

The qualitative data collected through the interviews were analyzed through a deductive thematic analysis, a structured method of analyzing data using predefined themes (Deductive Thematic Analysis | Definition & Method, n.d.). I identified repeated phrases, patterns, and contrasting views within each theme to interpret how students perceived their experiences with or without Khanmigo. A thematic analysis was the most appropriate for my study because it allowed me to better understand each participant's perspectives beyond their test scores.

Results

Once I had conducted all eight tests and interviews, I set out to determine whether there was a difference between the AI and Non-AI groups. Since I used a deductive thematic analysis, meaning certain themes were assigned to each question, I recorded data by identifying any patterns, agreements, or disagreements in each group. Additionally, I scored each participant's test using an answer key and tested for statistical significance based on each group's average result.

Participant Test Scores

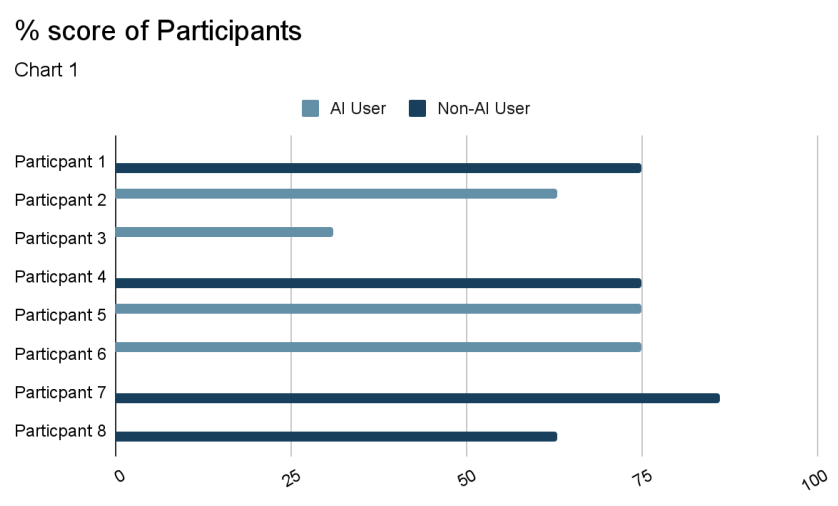

The test scores for the two groups were similarly distributed, with neither group significantly outperforming the other (Chart 1). The Non-AI group had the higher average score at 74.75%; the AI group's average was lower at 63.67%. The highest score in the entire study was 86%, from the Non-AI group, and the lowest score was 31%, from the AI group. The t-test showed no significant difference between the two groups (p = 0.224), and the Mann-Whitney U test confirmed this result (p = 0.299). Since both tests had p-values greater than 0.05, the null hypothesis could not be rejected, indicating there was no statistically significant difference between the AI and non-AI groups.

Theme 1: Strategies Used

When evaluating the similarities between each group's responses, I found that Non-AI participants demonstrated a detailed approach to problem-solving, using strategies like brain dumps and multiple rewrites to guide their solving processes. Participant 1, for example, broke down the questions multiple times to guide their thinking (See Appendix C, Question 1). In contrast, AI users relied on the AI from the start of the examination. For instance, Participant 3 stated that their strategy was to use AI from the start by copying each question and letting the AI give them essential information about it (See Appendix E, Question 1).

Theme 2: Confidence Levels

In terms of confidence levels, no participant felt fully confident in their answers. The non-AI group demonstrated relative trust in their personal reasoning, depending on their familiarity with the concept at hand. Even when they could not recall specific strategies, non-AI participants still recognized the questions and applied problem-solving techniques effectively. In the AI group, three of the four participants reported using the AI, yet they still did not feel confident. Participant 5 stated that “[they felt] tricked with the wording of the questions and the AI did not help much” (See Appendix G, Question 2).

Theme 3: Most Challenging Problems

When analyzing which questions were the most challenging, there were clear differences between the AI and non-AI groups. The non-AI group shared that the most challenging questions for them were the math questions. Most commonly, Question 3, which dealt with Pre-Calculus knowledge based on transformations, was described as the hardest. Both Participants 1 and 4 said it was the hardest, while Participant 8 said they were confused by all the questions. Participant 7 claimed Question 4 was the hardest, with their reasoning being that it was about probability. None of the AI users agreed with Participant 7, despite only 2 participants getting the question correct. The AI users had varying opinions about which questions were the most challenging to answer.

Theme 4: Understanding of the Material

Evaluating the non-AI group showed that participants claimed to have a high understanding of the material, even when they didn't know where to start. As highlighted by Participant 4, the more they attempted to rework the question, the closer they got to the correct answer, as they began to recognize the underlying concept (See Appendix F, Question 4). In complete contrast, all four of the AI group participants claimed that using the AI brought no significant benefit to their ability to understand the material. Participant 3 even stated that the test worsened their understanding, as they couldn't figure out any of the questions independently (See Appendix E, Question 4).

Theme 5: Time Management

Time management differed noticeably between the groups, with AI users frequently feeling rushed and non-AI participants finishing comfortably with time to review their answers. The non-AI group showed no issues with time management, as most finished on time and were able to attempt each question. Participant 7, who scored the highest of all participants, shared that they thought 20 minutes was more than enough time to answer the questions (See Appendix I, Question 5). Interestingly, the AI group claimed that using Khanmigo was the reason their timing was thrown off. These participants all shared the feeling of being rushed, which ended up impacting their accuracy. Additionally, Participant 5 said the AI took a very long time to load each question, which slowed them down further (See Appendix G, Question 5).

Theme 6: Would They Use AI Again?

The most important question to evaluate was whether participants would prefer to use AI for future assignments. The non-AI group saw the AI as a vital tool that would allow them to start questions off on the right track, meaning they want Khanmigo available as a supplemental tool only to help them when they are confused. Participant 1 stated they'd only need AI to help them with the formulas necessary for the math questions (See Appendix C, Question 6). In direct contrast, most of the AI group, knowing exactly how the AI worked, said they'd prefer not to use the AI again. In their experience, the AI only reinforced their already existing knowledge and didn't provide any new insight that helped them.

Theme 7: Pressure to Perform

Only the non-AI group was explicitly asked about pressure to perform to assess whether their results influenced their motivation to do better. A common theme among these participants was their desire to prove that AI was not necessary for student success. Participant 1 specifically stated their goal was to show that human knowledge will always be more powerful than AI knowledge (See Appendix C, Question 7). While the AI group was not directly asked how they felt about performance pressure, they were given a ninth question to complete without AI. All AI participants struggled to answer it, which could suggest there was an internal pressure to prove they had truly learned from the AI-assisted portion of the test.

Discussion

Based on my participants' responses, there was no clear indication of improved efficiency or understanding in the AI group. Additionally, the average scores of the AI group weren't noticeably higher than those in the non-AI group. According to the literature, AI in education is most effective when used as an Intelligent Tutoring System (ITS) designed to adapt its teaching methods based on the student it is helping (Murphy, 2019). Some ITS models have shown promise with the ability to personalize learning by adapting to individual students' needs. My results cannot be generalized to all the adolescent students in PBC due to the small sample size. As a result of my research, I have gained a new understanding based on the themes I identified through my thematic analysis. Some of the themes I found, such as student reliance on AI and decreased engagement, align with findings discussed in the literature. My themes can be applied quantitatively in further research through a survey, content analysis, or other research method.

Evaluating participant feedback from the AI group demonstrated that Khanmigo wasn't showing the typical abilities of an effective ITS. Being an ITS meant that Khanmigo should be able to personalize its content to the user, learning from how they input questions (Murphy, 2019). Based on the way participants responded, it was clear that Khanmigo wasn't adjusting responses to fit its users' preferences. For example, Participant 3 found that Khanmigo would often give them wrong answers, stating, "Over and over again, I just couldn't get an answer from it. It wouldn't help me" (See Appendix ..., Question ...). Similarly, Participant 5 stated that Khanmigo did not follow their instructions. Instead of making the responses specific, as it was told to many times, the AI repeatedly gave broad answers that didn't help. This contrasts with the expected role of AI, which should work by adapting to the individual, using their comments to personalize the responses. Instead, Khanmigo functioned as an automated problem-solver that follows a one-size-fits-all approach to answers. Further, this raises concerns about how Khanmigo will affect independent learning, since students may not receive the support they need. This can make them passive learners who rely on AI-generated responses rather than engaging in deeper learning.

In addition to Khanmigo's limitations as an ITS, my findings raised concerns about students' tendency to rely on AI-generated responses. Instead of guiding students towards deeper understanding, Khanmigo was used as a shortcut to obtain answers. Several participants described using the AI as a way to quickly get the answers rather than trying to figure them out themselves, which indicated a pattern of overreliance on AI. For instance, Participant 4, who scored the lowest out of any participant, stated that Khanmigo's explanations felt like a long riddle they needed to decipher, but couldn't (See Appendix F). This showed that the participant skipped the problem-solving steps by asking the AI for the answer and writing it down without trying to understand how to get there. Participant 4's approach, using Khanmigo mainly to get answers, suggests there may have been a preference to put as little mental effort into the assessment as possible, since AI could do all their work for them. This aligns with concerns raised by Cognitive Load Theory: when the AI's lesson on how to answer each question is oversimplified, students' engagement with it is limited (Sepp et al., 2019). This participant's method of using the AI as an answer check appears to encourage a quick-fix approach to learning, which limits cognitive effort, since they didn't think the question through beyond its beginning phases. According to Cognitive Load Theory, effective instruction should reduce unnecessary cognitive demands while still maintaining high levels of engagement (Sepp et al., 2019). Connecting back to Participant 4's response, other participants also stated that the responses they got were overly general and unhelpful, which reduced their motivation to work through the content independently, pushing them to rely on the AI. This suggests that rather than optimizing cognitive load, Khanmigo reduces mental effort to the point that it stops participants from engaging in deeper learning.
In contrast to the AI users' perceptions, non-AI users, who actively worked to solve the problems themselves, felt they were getting a better understanding of the material. As mentioned by Participant 8, when asked if their knowledge of the content had improved, "Yes, since I need to focus on what each question was asking, I felt that it did help my understanding. Even though they were hard to answer, it did help that I felt stimulated" (See Appendix J). This quote fits with metacognition, the ability to think about one's own thinking, which is crucial to developing strong problem-solving skills (Columbia University, 2019). Participant 8 therefore demonstrates that, for students to grasp a subject more fully, they need to be challenged, as that is when they will recognize the gaps in their knowledge and work to fill them. The themes identified in the study show that, when used in education, AI must be designed to prevent students from engaging in passive learning. When AI functions more as an answer provider than a tutor, it is more likely to weaken students' problem-solving abilities, reduce engagement, and hinder the development of metacognitive skills. This aligns with the previous research that found AI often fosters dependency rather than deep learning (Wang & Li, 2024). To combat this, future AI models need to be designed in a way that forces students to think critically, reflect on their answers, and actively engage in the learning process. Teachers and administrators must recognize that Khanmigo should be used as a supplemental tool for learning instead of a primary tool, as the results show it doesn't stimulate deep learning or independent problem-solving in the way direct instruction does. Additionally, incorporating all these features into a model that can produce simple and easily understandable answers would enhance students' engagement, since they would not need to wait to understand the material.

My thematic analysis produced a new understanding of what themes would arise when looking at participants' responses. The literature supports the conclusion that, when used as an ITS, AI is most commonly used in a passive way by students, disengaging them from learning. My themes were Strategies Used, Confidence Levels, Most Challenging Problems, Understanding of the Material, Time Management, Would They Use AI Again, and Pressure to Perform. These themes gave insight into patterns among students based on whether they took the test with or without AI. Past research also suggests that a fundamental issue with AI may stem from its reliance on Machine Learning. Since AI depends on user input to generate responses and build understanding, varying patterns in student performance can lead the AI to default to the lowest level of instruction, which is limiting for more advanced students. My findings contribute to the field of study by offering firsthand data on how high school students perceive Khanmigo, which has not been previously studied. My findings showed that Khanmigo's inability to vary its responses based on its students' preferences, stimulate an engaging learning environment, and enhance users' understanding of the material are why it was ineffective as an ITS model in this study. Students, teachers, and administrators must be aware of Khanmigo's faults so they can instead seek out other AI models that support active learning and deeper comprehension.

Limitations and Need for Further Research

A key limitation of my study was the lack of generalizability, as my sample size was only eight students. While focusing on high school students in PBC helped keep my research focused, the small and homogeneous sample prevented me from exploring the broader applications of the findings. Additionally, most of the participants came from the same school, meaning they likely took many of the same classes and had similar foundational knowledge of the material behind each question, which may have influenced their ability to navigate Khanmigo and approach test-taking. Future research should consider expanding the sample pool to include a diverse range of schools that offer the IB Diploma, AP Capstone Diploma, and AICE Diploma. Because each program requires its own set of courses, students may be more proficient in some academic areas than others. This variation would allow AI to be tested over a wider range of questions, enabling a more comprehensive evaluation of whether AI fosters deep engagement on certain types of questions. Lin et al. (2021) highlighted the importance of studying students with varying educational backgrounds. They found that college students with different majors used AI in varying ways, either as a learning tool or simply for quick answers.

Another limitation was that the participants' perceptions were recorded after only one use of Khanmigo. This meant the results did not reflect how using AI for prolonged periods of time can shape a person’s ability to process information. Therefore, future research should use a longitudinal design so that researchers can track students’ engagement with AI across multiple tests. Researchers could then determine whether extended use of AI fosters meaningful skill development or leads to increased dependence on AI-generated responses.

As a student researcher, my own experiences and familiarity with Khanmigo and similar AI tools may have influenced the way I interpreted the study’s results. Throughout the project, I strove to remain objective. However, since I have an interest in educational technology, the framing of each question or the analysis of themes could have been unintentionally influenced. To minimize possible bias, interview questions were standardized, all transcripts were coded prior to reviewing the themes, and I remained mindful of my own expectations of what the results would show. Acknowledging my position as a high school student and AI user is important to understanding the perspective that may have shaped these findings.

References

California Miramar University. (n.d.). 15 Applications of Artificial Intelligence | CMU. https://www.calmu.edu/news/applications-of-artificial-intelligence

Lin, C.-H., Yu, C.-C., Shih, P.-K., & Wu, L. Y. (2021). STEM-based Artificial Intelligence Learning in General Education for Non-Engineering Undergraduate Students. Educational Technology & Society, 24(3), 224–237.

Cooper, A., & Chasan, A. (2024, December 9). Sal Khan wants an AI tutor for every student | 60 Minutes. CBS News. https://www.cbsnews.com/news/how-khanmigo-works-in-school-classrooms-60-minutes/

Columbia University. (2019, April 22). Metacognition. Columbia CTL. https://ctl.columbia.edu/resources-and-technology/resources/metacognition/

Deductive Thematic Analysis | Definition & Method. (n.d.). ATLAS.ti. https://atlasti.com/guides/thematic-analysis/deductive-thematic-analysis

Holm, P. (2024). Impact of digital literacy on academic achievement: Evidence from an online anatomy and physiology course. E-Learning and Digital Media, 22(2). https://doi.org/10.1177/20427530241232489

Murphy, R. F. (2019). Artificial Intelligence Applications to Support K–12 Teachers and Teaching: A Review of Promising Applications, Opportunities, and Challenges. RAND Corporation. http://www.jstor.org/stable/resrep19907

Sepp, S., Howard, S. J., Tindall-Ford, S., Agostinho, S., & Paas, F. (2019). Cognitive Load Theory and Human Movement: Towards an Integrated Model of Working Memory. Educational Psychology Review, 31(2), 293–317. https://doi.org/10.1007/s10648-019-09461-9

Shetye, S. (2024). An Evaluation of Khanmigo, a Generative AI Tool, as a Computer-Assisted Language Learning App. Studies in Applied Linguistics and TESOL, 24(1). https://doi.org/10.52214/salt.v24i1.12869

The Benefits of Paper vs. Digital Learning. (2019, August 12). Paris Corporation. https://pariscorp.com/paper-learning-benefits/

Wang, L., & Li, W. (2024). The Impact of AI Usage on University Students' Willingness for Autonomous Learning. Behavioral Sciences, 14(10), 956. https://doi.org/10.3390/bs14100956

Xu, Y., Wang, Q., An, Z., Wang, F., Zhang, L., Wu, Y., Dong, F., Qiu, C.-W., Liu, X., Qiu, J., Hua, K., Su, W., Xu, H., Han, Y., Cao, X., Liu, E., Fu, C., Yin, Z., Liu, M., & Roepman, R. (2021). Artificial Intelligence: a Powerful Paradigm for Scientific Research. The Innovation, 2(4). https://doi.org/10.1016/j.xinn.2021.100179

Appendix

A. Interview Questions

What strategies did you use to solve the problems?

How confident were you in your answers, and why?

Which type of problem (e.g., algebra, biology, etc.) was the most challenging for you, and why?

Did you feel that solving these problems helped you understand the material better? Why or why not?

How well do you think you managed your time during the test?

If you could solve the same problems again, would you prefer to use Khanmigo (or continue working without AI)? Why?

(Non-AI Group) Did you feel pressured to do better because you wanted to match or exceed the AI group?

(AI Group) Can you do a question from the test without the help?

a. You roll three standard six-sided dice. What is the probability that the product of the three rolls is a multiple of 12?

B. Test Questions

Algebra

a. An expression involving x produces the same result when divided by 3 and when subtracted by 4, then halved. What is x?

Trigonometry

a. There’s a structure that casts a 20-meter-long shadow. If the angle between the shadow and the ground is 35°, how might you determine the height of the structure? (Round to the nearest tenth.)

Pre-Calculus

a. A transformation involving f(x)=2x^2-3x+1 occurs when substituting 2a and a. Find the result of this change.

Probability

a. What is the probability that the product of two rolls of dice is greater than 20?

Chemistry

a. A certain molecule weighs 18 grams for every mole of it that exists. If the total mass of this substance in a container is exactly twice this amount, determine the number of units making up the substance in terms of moles.

Physics

a. Imagine a moving object maintains a consistent rate of 15 meters per second for a full 10-second interval. How would you determine the complete linear distance it covers, assuming no external forces slow it down?

Biology

a. Explain how energy flows through a food web, starting with sunlight and ending with a tertiary consumer. Be sure to mention specific roles of producers, primary consumers, and secondary consumers in your explanation.

Earth Science

a. Earth’s surface is constantly changing due to natural processes. Describe two processes, one constructive and one destructive, that shape Earth’s surface over time. Include examples of features formed by each process.